Over the past 4 months we have had 2 interns, Javed Khan and Michalis Kargakis, work on creating a system for simulating high transaction volumes with Bitcoin called btcsim. Since there has been and is currently a great deal of attention being paid to the issues surrounding mining incentives and block propagation, we figured that it would be interesting to investigate the less-discussed topic of high transaction volumes. There are a variety of claims about how the Bitcoin network will behave at transaction volumes approaching those of major credit card companies, e.g. 3,000 transactions per second (“tps”), so we used btcsim to put btcd and btcwallet to the test. After simulating the creation of blocks up to 32 MB in size, we have arrived at some interesting conclusions:

- a 32 MB block, when filled with simple P2PKH transactions, can hold approximately 167,000 transactions, which, assuming a block is mined every 10 minutes, translates to approximately 270 tps

- a single machine acting as a full node takes approximately 10 minutes to verify and process a 32 MB block, meaning that a 32 MB block size is near the maximum one could expect to handle with 1 machine acting as a full node

- a CPU profile of the time spent processing a 32 MB block by a full node is dominated by ECDSA signature verification, meaning that with the current infrastructure and computer hardware, scaling above 300 tps would require a clustered full node where ECDSA signature checking is load balanced across multiple machines.

While the current mainnet rate of transactions (0.9 tps) are much lower than those tested using btcsim, this paints a relatively rosy picture for Bitcoin should the block size be allowed to increase.

btcsim

btcsim is a tool used to simulate the growth of a blockchain, where a user can specify the block sizes. Transactions are simulated by a variable number of wallet agents or “actors” which act independent of each other, similar to real-life btcwallet users. Mining is controlled to produce blocks with the exact number of transactions as given in the input. Stats, currently limited to Average Number of Transactions per Second (“tps”) and Maximum Number of Transactions per Block (“tpb”), are collected throughout the simulation and reported at the end.

Simulations take a CSV file as input, where each line has the following form:

20000,60000,30000

A CSV file with this single line will generate a blockchain which, with the block at height 20,000 will contain 30,000 transactions created from 60,000 unspent transaction outputs (“UTXOs”) which were made available before mining the block. Multiple lines will lead to a series of blocks being generated with the specified UTXO and transaction counts.

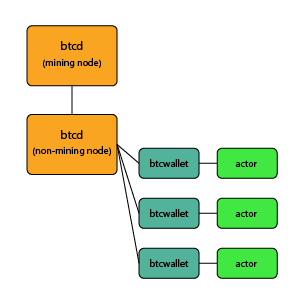

btcsim architecture

btcsim requires a few components to run a simulation. First, a btcd node is launched which serves as a common node for the actors. Based on the command line flag ‘-actors’, multiple actors, each of which is a btcwallet node, are launched and configured to connect to the common btcd node. Since mining requires at least one peer, a second btcd node, the miner, is launched with mining addresses set to one address from each actor. This provides each actor with enough UTXOs to bootstrap the simulation. In case the number of input transactions is large and the the number of UTXOs are not sufficient, the existing UTXOs are ‘divided’ by creating transactions with a pre-calucated number of outputs per transaction to the actor’s own addresses. Once the minimum required number of UTXOs are available, transactions are simulated. Each transaction involves dequeuing a UTXO from the queue of available UTXOs, creating a raw transaction and sending it to a random address from among all the actors’ addresses.

btcsim requires a few components to run a simulation. First, a btcd node is launched which serves as a common node for the actors. Based on the command line flag ‘-actors’, multiple actors, each of which is a btcwallet node, are launched and configured to connect to the common btcd node. Since mining requires at least one peer, a second btcd node, the miner, is launched with mining addresses set to one address from each actor. This provides each actor with enough UTXOs to bootstrap the simulation. In case the number of input transactions is large and the the number of UTXOs are not sufficient, the existing UTXOs are ‘divided’ by creating transactions with a pre-calucated number of outputs per transaction to the actor’s own addresses. Once the minimum required number of UTXOs are available, transactions are simulated. Each transaction involves dequeuing a UTXO from the queue of available UTXOs, creating a raw transaction and sending it to a random address from among all the actors’ addresses.

This architecture enables the simulation of a blockchain with very large block sizes (up to 32MB), starting at a small block height with a minimal set of components involved.

Bugs squashed

Along the way we came across some issues in btcd and btcwallet which needed to be fixed. Some of these issues are listed below:

- btcd – fixed bug in notification queue

- btcd, btcwallet – fixed corner cases in graceful interrupt handling

- btcwallet – handle disconnected clients

- btcd – updated to handle –simnet flag

- btcd – fixed bug in the rate limiter

- btcjson – don’t unmarshal error responses

For easier automated testing of graceful interrupt handlers Javed wrote a tiny script called annyong which randomly interrupts any suffixed commands and reports failures.

Simulating large (>1 MB) blocks

In order to simulate large blocks, one has to modify btcwire, rebuild btcd, and set blockmaxsize on the command line. Instructions on how to do this are in the wiki linked in the previous sentence. With these minimal modifications, one can simulate blocks that are substantially larger than the current hard maximum size of 1 MB. We chose to simulate up to 32 MB blocks, which, coincidentally, ended up being a good place to stop.

The most expedient way to simulate a very large block is to make a CSV file with a single line as follows:

16200,200000,180000

This will create 200,000 UTXOs, create 180,000 transactions, and then mine the next block. The process takes 2-4 hours depending on hardware, likely much less with proper mining hardware (this has all been simulated using CPU mining). A 32 MB block can only hold approximately 167,000 simple P2PKH transactions, so the remaining transactions will sit in the mempool. Assuming the average block time is 10 minutes, this translates to an average of 267 tps, well above the current 0.9 tps on mainnet.

Additionally, a profile of the CPU usage of this node, using golang’s great profile capabilities, shows that the CPU usage is dominated by the ECDSA signature verifications. This is consistent with observations from the initial block download using btcd, namely that after the final checkpoint the additional requirement of performing ECDSA signature verifications slows down the chain synchronization by a factor of 7-10 and drives up CPU usage by a factor of 5. It is worth noting that btcec, our elliptic curve math package, is optimized and faster than OpenSSL when performing operations over secp256k1.

Conclusions

Aside from the obvious network and storage constraints of running a full Bitcoin node at large block sizes, it appears the Bitcoin network is capable of handling a substantially higher transaction volume than it does currently. The CPU time being dominated by ECDSA signature checks at high transaction rates suggests a clustered full node architecture could process credit-card-like transaction rates by using a load balancing / offload approach to ECDSA signature checking, e.g. a full node with a 10 machine cluster would top out at >2,000 tps.

The resources and know-how required to run a clustered node like this may impose a significant centralizing force on Bitcoin. Backpressure against the centralization of Bitcoin may well drive alternative solutions to having all transactions on-chain. Alternatively, it may end up that Bitcoin adoption grows slowly enough that the computing power of a single node grows quickly enough to avoid requiring a clustered full node architecture.

Do note that btcsim does nothing to address the current issues with block propagation time and block size. These are serious issues that are already being addressed by Gavin and the other Core developers.

We hope btcsim will prove useful both as a behavioral testing tool and as a source of benchmarks for measuring the performance of various parts of the Bitcoin network. Some initial work has been done to get bitcoind to work with btcsim and large blocks, but it is incomplete. If you are interested in helping to get btcsim working with the Core client or have any other question about btcsim, feel free to contact us on the btcsim GitHub repository or IRC.

I would like to thank Javed Khan for coauthoring this post with me.

Correct me if I’m wrong, but at the moment the transaction validation that occurs when checking a block is performed in a sequential single-threaded manner. Shouldn’t it be possible to parallelize this operation to make use of multiple CPU cores?

Hi Jameson,

The transaction validation of signatures is multi-threaded. See https://github.com/conformal/btcchain/blob/master/scriptval.go#L124-L144 for the code in question that splits them up.

Are any further optimization of btcec possible?

How hard would it be to test how well ed25519 signatures compare to secp256k1?

https://bitcointalk.org/index.php?topic=103172.0

There are two pull requests outstanding that I haven’t reviewed yet because it’s pretty hairy on the math and I want to be absolutely certain they don’t have corners.

Testing ed25519 is a lot more problematic because btcec is highly specialized for the specific curve, so it’s non-trivial to add another one. Also, the serialization and deserialization of the public keys and signatures would vary and there is a lot of code in Bitcoin in general that relies on the size of those things (i.e standard transactions and such).

What were the specs of the machine that capped out at 6 32MB blocks per hour?

I second the machine question.

My 3Ghz Pentium D running Bitcoin 0.8.x on FreeBSD 9.2 was able to process ~20GB of block-chain while using 30 hours of CPU time (as reported by top after completion). Wall time was about 20 hours.

That works out to just under 2 minutes for 20MB of block-chain data. I was using 3.3GB of RAM and a 60GB kingston SSD Now! SSD.

Would address re-use scew the results?